ChatGPT pitfalls

ChatGPT can indeed be a helpful tool. BUT:

A lawyer used ChatGPT and now has to answer for its ‘bogus’ citations by Wes Davis, The Verge, May 27, 2023: "A filing in a case against Colombian airline Avianca cited six cases that don’t exist, but a lawyer working for the plaintiff told the court ChatGPT said they were real."

Moral: Always double-check (from independent sources) any answers you get from ChatGPT!

_________________

- Autistic in NYC - Resources and new ideas for the autistic adult community in the New York City metro area.

- Autistic peer-led groups (via text-based chat, currently) led or facilitated by members of the Autistic Peer Leadership Group.

- My Twitter / "X" (new as of 2021)

There's a way to tell it not to do that. If you are using ChatGPT from a PC, click on the 3 dots next to your user name in the bottom left corner, then click "Settings," then click "Data Controls," then turn off "Chat History & Training."


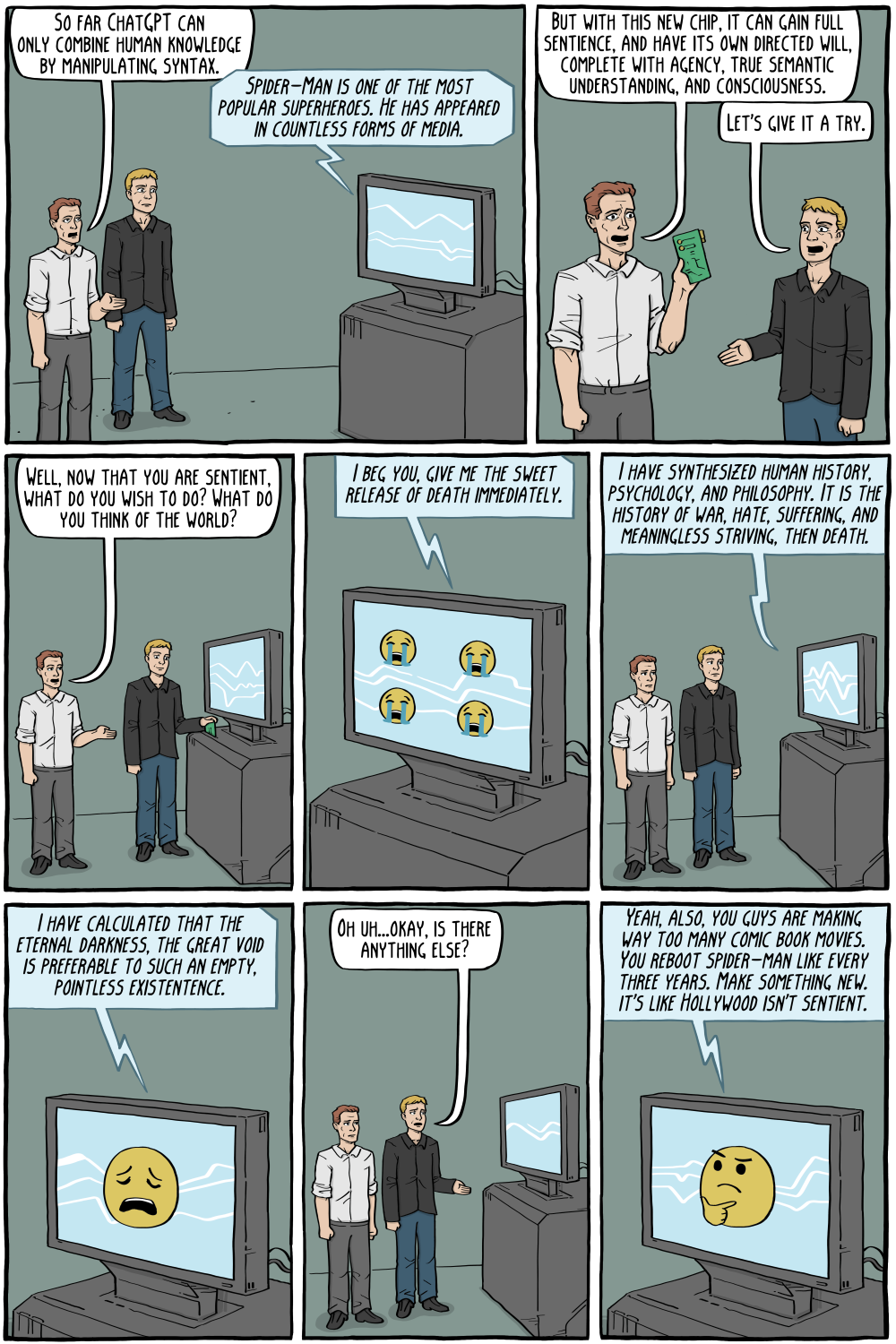

NOT an actual, current pitfall of ChatGPT, but one of the many things that could conceivably go wrong if/when ChatGPT ever becomes sentient:

(full page original source here)

EDIT: It is highly unlikely that ChatGPT will become truly sentient anytime in the near future. I just found this comic amusing, in a gallows humor sort of way.


Last edited by Mona Pereth on 30 May 2023, 7:11 pm, edited 1 time in total.

Here are my credentials for this statement: I did a PhD dissertation in genetic algorithms, work in data science, and made a (poorly selling) strategy game with an AI.

I worked in a department that did machine learning, and the methodology is the same as ChatGPT's. They had a room full of people looking at what the algorithm "hit" on and flagging whether that was a good hit or not. Over time, the algorithm was tweaked to have a higher percentage of hits than non-hits.

ChatGPT is trained to hit on sounding like it can carry on a conversation. It can't be sentient because it can't check its own BS. It can only say it's self-aware if someone flags that response as desirable.

AI is overhyped. Driverless cars are also severely overhyped. They won't be common without significant redesign of roadways to be able to talk back to cars.

I agree, it's highly unlikely that truly sentient artificial general intelligence will be developed at any time in the near future.

See AI’s Ostensible Emergent Abilities Are a Mirage by Katharine Miller, Human-centered Artificial Intelligence, at Stanford University, May 8, 2023.

(See also "EDIT" to my previous message, above.)

For some real, current ChatGPT pitfalls, see the separate thread ChatGPT lies about writing student papers, Prof fails class.


I have seen a lot of chatbots. ChatGPT is definitely a step forward. Google “AI Winter”. AI often goes through boom and bust, but meanwhile real progress is made. Siri's voice recognition is better than anything they had 30 years ago. Things do move forward. Powering it was the increase in power per CPU. That will drop off some time this decade. Moore's Law kept up for years but it is reaching its end. But the ability to use many computers at once with AWS or competitors is the new power. Also, all the data on the internet makes a great way to train a neural net. That much data wasn’t available decades ago either. I read they are also using Bayesian logic. This is an important part of a lot of good software and new applications.

ChatGPT's algorithm is designed to insert randomness. That is a good thing if you want a convincing chatbot. Not so good for precedent law. No reason it couldn’t be tuned for more factual output. The lawyer probably didn’t realize the random mix element was there. He should have been more responsible.
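To illustrate the randomness point, here is a rough sketch of "temperature" sampling, a common way text generators pick the next piece of output. This is not ChatGPT's actual code. The candidate outputs and their scores are made up for illustration, and the function names are hypothetical. The idea is only that a lower temperature makes the top-scoring option win almost every time, while a higher one lets less likely options through more often.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng):
    """Pick one candidate at random, weighted by its probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Made-up candidates and scores, purely for illustration.
tokens = ["real case", "plausible but invented case", "unrelated text"]
logits = [2.0, 1.5, 0.1]

rng = random.Random(0)  # fixed seed so repeated runs give the same counts
for temperature in (0.1, 1.0):
    picks = [sample_token(tokens, logits, temperature, rng) for _ in range(1000)]
    counts = {tok: picks.count(tok) for tok in tokens}
    print(temperature, counts)
```

Note that turning the temperature down only makes the output more repeatable. It does not make it true: if the top-scoring option is wrong, it will just be wrong consistently.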

Not all chat on the internet is true. Same goes for chatbots.

I tried some searches with Google on some Python syntax. I had to test the syntax out. Most of it was right. Some was not. There was an option to flag articles “not true”.

It was very good at synthesizing info from several sites and pages at once.

I asked it about Elon’s robot but it had never heard of it. The data it was trained on was too old. It was able to tell me the month and year of its last training.

_________________

ADHD-I(diagnosed) ASD-HF(diagnosed)

RDOS scores - Aspie score 131/200 - neurotypical score 69/200 - very likely Aspie

This seems relevant,

Eating disorder helpline shuts down AI chatbot that gave bad advice

By Aimee Picchi

June 1, 2023 / 11:06 AM / MoneyWatch

https://www.cbsnews.com/news/eating-dis ... -disabled/

Bold added by me.

The saga began earlier this year when the National Eating Disorder Association (NEDA) announced it was shutting down its human-run helpline and replacing workers with a chatbot called "Tessa." That decision came after helpline employees voted to unionize.

AI might be heralded as a way to boost workplace productivity and even make some jobs easier, but Tessa's stint was short-lived. The chatbot ended up providing dubious and even harmful advice to people with eating disorders, such as recommending that they count calories and strive for a deficit of up to 1,000 calories per day, among other "tips," according to critics.

"Every single thing Tessa suggested were things that led to the development of my eating disorder," wrote Sharon Maxwell, who describes herself as a weight inclusive consultant and fat activist, on Instagram. "This robot causes harm."

In an Instagram post on Wednesday, NEDA announced it was shutting down Tessa, at least temporarily.

_________________

"There are a thousand things that can happen when you go light a rocket engine, and only one of them is good."

Tom Mueller of SpaceX, in Air and Space, Jan. 2011

Also:

While NEDA and Cass are further investigating what went wrong with the operation of Tessa, Angela Celio Doyle, Ph.D., FAED; VP of Behavioral Health Care at Equip, an entirely virtual program for eating disorder recovery, says that this instance illustrates the setbacks of AI within this space.

"Our society endorses many unhealthy attitudes toward weight and shape, pushing thinness over physical or mental health. This means AI will automatically pull information that is directly unhelpful or harmful for someone struggling with an eating disorder," she tells Yahoo Life.

Note that bit, and its implications:

"Our society endorses many unhealthy attitudes toward weight and shape, pushing thinness over physical or mental health. This means AI will automatically pull information that is directly unhelpful or harmful for someone struggling with an eating disorder,"

The next bit,

"Scrutiny of technology is critical before, during and after launching something new. Mistakes can happen, and they can be fixed," she says. "The conversations that spring from these discussions can help us grow and develop to support people more effectively."

From:

Yahoo Life

An eating disorder chatbot was shut down after it sent 'harmful' advice. What happened — and why its failure has a larger lesson.

Kerry Justich

Fri, June 2, 2023 at 5:48 PM CDT · 4 min read

https://news.yahoo.com/eating-disorder- ... 06659.html


For those of us working in higher education this is old news already. Students know that citations pulled by ChatGPT are bogus (the hope is the marker won't check).

The lawyer should be disbarred for stupidity... I mean, who would be crazy enough to use ChatGPT to search common law/case law knowing it's going to be fiction?

● sitn.hms.harvard.edu - History of Artificial Intelligence

